Visual perception is not a passive reflection of the external world, but an active construction shaped by both sensory input and internal states. In this line of research, we investigate how top-down influences—such as attention, emotion, and goals—interact with bottom-up sensory signals to shape conscious visual experience.

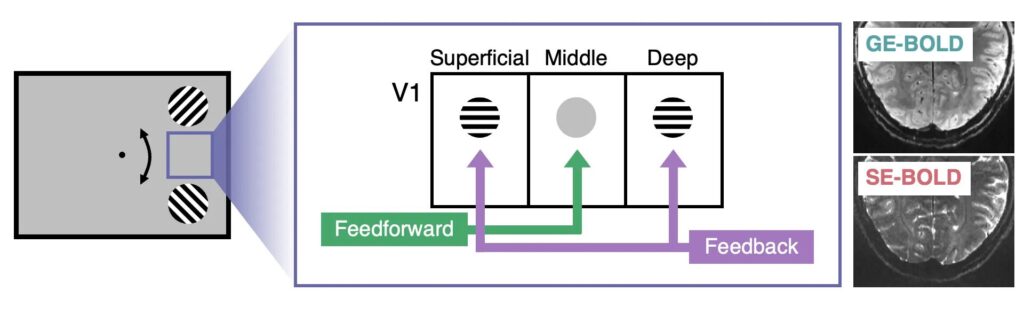

Laminar fMRI: Feedback and feedforward in visual cortex

We study cortical layer-dependent feedback and feedforward signals during visual filling-in. Visual filling-in can evoke intermediate feature representations in primary visual cortex (Chong et al., 2016). Using ultra-high-field laminar fMRI with spin-echo (SE) BOLD signals, which offer high spatial specificity, we examine how feedforward and feedback signals are distributed across cortical layers during this process.
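
To illustrate what a layer-resolved readout can look like, the sketch below assigns each voxel a normalized cortical depth (assumed convention: 0 at the white-matter boundary, 1 at the pial surface) and averages its response within three equal-width depth bins. The bin names, equal-width binning, function name, and toy data are all hypothetical; this is not the project's pipeline. Under a widely used, simplified interpretation, feedforward input targets middle layers while feedback targets deep and superficial layers, so a relative response increase outside the middle bin is one assumed signature of feedback.

```python
from statistics import mean

# Hypothetical bin labels, ordered from white-matter boundary (0) to pial surface (1).
LAYER_BINS = ("deep", "middle", "superficial")


def depth_profile(depths, responses, n_bins=3):
    """Average voxel responses within equal-width cortical-depth bins.

    depths: normalized cortical depth per voxel, 0.0 (white matter) to 1.0 (pial).
    responses: response estimate per voxel (e.g., percent signal change).
    Returns the mean response per bin, or None for bins with no voxels.
    """
    if len(depths) != len(responses):
        raise ValueError("depths and responses must have the same length")
    bins = [[] for _ in range(n_bins)]
    for d, r in zip(depths, responses):
        if not 0.0 <= d <= 1.0:
            raise ValueError(f"depth {d} outside [0, 1]")
        # Depth exactly 1.0 would index past the end, so clamp it into the last bin.
        idx = min(int(d * n_bins), n_bins - 1)
        bins[idx].append(r)
    return [mean(b) if b else None for b in bins]


if __name__ == "__main__":
    # Toy data: stronger responses near both cortical surfaces than in the middle.
    depths = [0.1, 0.2, 0.4, 0.5, 0.6, 0.8, 0.9]
    responses = [1.2, 1.0, 0.6, 0.5, 0.7, 1.4, 1.6]
    for name, value in zip(LAYER_BINS, depth_profile(depths, responses)):
        print(f"{name:>11}: {value:.2f}")
```

Equal-width bins are the simplest choice; equivolume depth sampling is a common alternative when cortical curvature matters.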

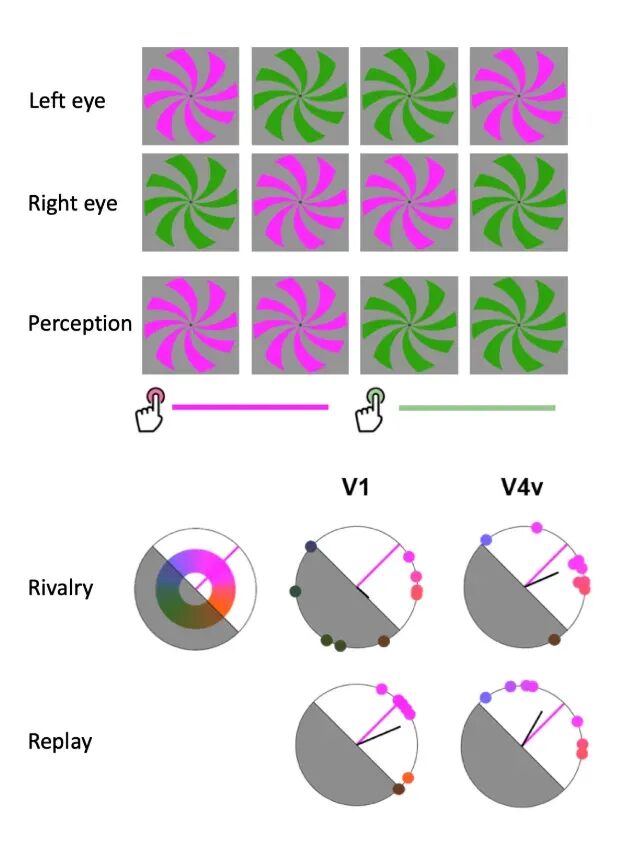

Perceptual color experience

We study how subjective perceptual experiences (percepts that are distinct from the retinal input) are represented across the visual hierarchy. By linking moment-to-moment color experience during interocular switch rivalry with population-level, color-selective responses in hierarchically organized cortical regions, we examine how neural representations that correspond to conscious perception emerge and evolve along the visual processing hierarchy.
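
One common way to relate population responses to a reported percept is pattern decoding: train a classifier on multi-voxel response patterns and test whether it predicts the color the observer reports. The sketch below uses a minimal nearest-centroid classifier with leave-one-out cross-validation; the classifier, the "red"/"green" labels, the function names, and the toy patterns are hypothetical and are not this project's analysis.

```python
import math
from collections import defaultdict


def centroids(patterns, labels):
    """Mean response pattern (one value per voxel) for each reported color."""
    groups = defaultdict(list)
    for x, y in zip(patterns, labels):
        groups[y].append(x)
    return {y: [sum(col) / len(xs) for col in zip(*xs)] for y, xs in groups.items()}


def predict(cents, x):
    """Return the label whose centroid is closest (Euclidean distance) to pattern x."""
    return min(cents, key=lambda y: math.dist(cents[y], x))


def loo_accuracy(patterns, labels):
    """Leave-one-out accuracy of nearest-centroid decoding of the reported percept."""
    correct = 0
    for i in range(len(patterns)):
        # Train on every sample except i, then test on sample i.
        cents = centroids(patterns[:i] + patterns[i + 1:], labels[:i] + labels[i + 1:])
        correct += predict(cents, patterns[i]) == labels[i]
    return correct / len(patterns)


if __name__ == "__main__":
    # Toy 3-voxel patterns for two hypothetical reported colors.
    patterns = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.1], [1.1, 0.0, 0.2],
                [0.1, 1.0, 0.9], [0.0, 0.9, 1.1], [0.2, 1.1, 1.0]]
    labels = ["red", "red", "red", "green", "green", "green"]
    print(loo_accuracy(patterns, labels))
```

Running the same decoder region by region is one way to ask where along the hierarchy percept-related information becomes decodable.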

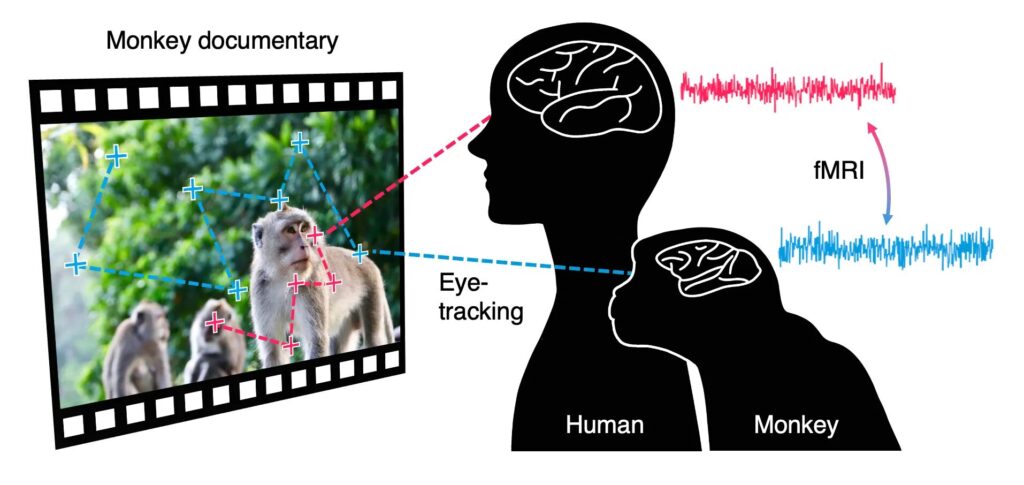

Cross-species comparison during naturalistic movie viewing

How similar are neural and behavioral responses in humans and monkeys during naturalistic movie viewing, and where do they differ? Although both species receive comparable low-level audiovisual input, they may diverge in higher-level cognitive processes such as parsing social interactions and narrative structure. Using concurrent fMRI and eye tracking with shared movie stimuli, we compare event processing across species and test how cross-species differences shape moment-to-moment visual perception and attention.
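
A simple behavioral starting point for cross-species comparison is to ask how closely two viewers' gaze tracks each other on the same movie frames. The sketch below assumes gaze has already been resampled to the movie's frame times and expressed in degrees of visual angle; it computes the Pearson correlation of one gaze coordinate and the fraction of frames on which the two gaze points fall within a radius. The 2-degree radius, function names, and toy data are hypothetical, not this project's metrics.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length time series."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length series with at least 2 samples")
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def gaze_overlap(gaze_a, gaze_b, radius_deg=2.0):
    """Fraction of frames on which two viewers' gaze points lie within radius_deg."""
    if len(gaze_a) != len(gaze_b):
        raise ValueError("gaze traces must be aligned to the same frames")
    close = sum(math.dist(p, q) <= radius_deg for p, q in zip(gaze_a, gaze_b))
    return close / len(gaze_a)


if __name__ == "__main__":
    # Toy (x, y) gaze positions in degrees, one per movie frame.
    human = [(0.0, 0.0), (1.0, 0.0), (5.0, 2.0), (6.0, 3.0)]
    monkey = [(0.5, 0.0), (1.0, 1.0), (2.0, 2.0), (6.0, 4.0)]
    r_x = pearson([p[0] for p in human], [p[0] for p in monkey])
    print(f"horizontal gaze r = {r_x:.2f}")
    print(f"overlap = {gaze_overlap(human, monkey):.2f}")
```

Computing the same measures within species (human vs. human, monkey vs. monkey) gives a baseline against which cross-species similarity can be judged.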

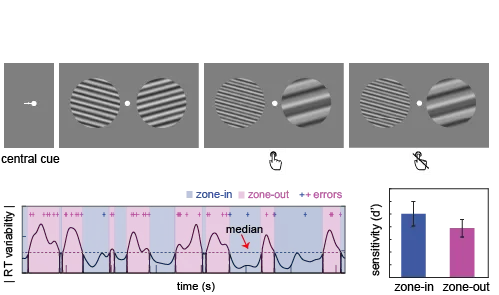

Dynamic neural modulation by time-varying attentional states

Do we perceive the same stimulus differently when “in the zone” vs. “zoning out”? Successful task performance depends on the ability to sustain attention over time, and prior work identifies alternating attentional states (“in the zone” / “out of the zone”). We test whether these attentional fluctuations modulate sensory representations in lower-level visual areas, and how such modulation relates to large-scale networks that track intrinsic attentional dynamics.
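
In the sustained-attention literature, one common way to label “in the zone” and “out of the zone” periods is a reaction-time variance time course: z-score the reaction times (RTs), take each trial's absolute deviation, smooth across neighboring trials, and median-split into low-variability (“in the zone”) and high-variability (“out of the zone”) epochs. The sketch below follows that general recipe under stated assumptions (a centered moving average rather than the Gaussian kernel often used, hypothetical function names, toy RTs); it is not necessarily how this project defines attentional states.

```python
from statistics import mean, median, pstdev


def variance_time_course(rts, half_window=2):
    """Smoothed absolute deviation of z-scored RTs.

    Smoothing is a centered moving average over +/- half_window trials
    (an assumption; a Gaussian kernel is another common choice).
    Requires RTs that are not all identical (nonzero standard deviation).
    """
    mu, sd = mean(rts), pstdev(rts)
    dev = [abs((rt - mu) / sd) for rt in rts]
    smoothed = []
    for i in range(len(dev)):
        lo, hi = max(0, i - half_window), min(len(dev), i + half_window + 1)
        smoothed.append(mean(dev[lo:hi]))
    return smoothed


def attention_states(rts, half_window=2):
    """Median-split the variance time course: 'in' = stable RTs, 'out' = variable RTs."""
    vtc = variance_time_course(rts, half_window)
    cutoff = median(vtc)
    return ["in" if v <= cutoff else "out" for v in vtc]


if __name__ == "__main__":
    # Toy RTs (ms): a stable first half followed by an erratic second half.
    rts = [500, 505, 495, 500, 502, 498, 350, 650, 400, 620, 300, 700]
    print(attention_states(rts))
```

Trial-wise labels like these could then be used to sort sensory responses in early visual areas by attentional state.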

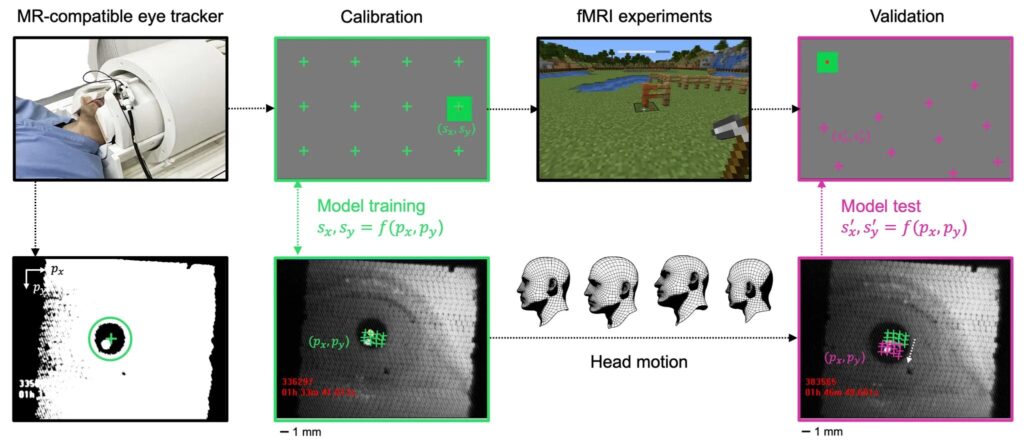

High-precision eye tracking in the scanner

Human eye movements are essential for understanding cognition, yet obtaining high-precision eye tracking during fMRI is challenging. Even small head movements after calibration can introduce drift that degrades gaze accuracy. To address this, we developed Motion-Corrected Eye Tracking (MoCET), which corrects drift using head-motion parameters estimated during fMRI preprocessing.
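
The general idea of correcting gaze drift with head-motion parameters can be illustrated with a linear model: regress a gaze coordinate on per-sample head-motion regressors (for example the six rigid-body parameters, three translations and three rotations) and subtract the fitted motion component. This is a hypothetical sketch under that linear assumption, not MoCET's actual algorithm; the function names and toy data are illustrative. A plain regression like this would also remove any genuine gaze variance that happens to correlate with head motion.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def correct_drift(gaze, motion):
    """Remove the part of a gaze trace linearly explained by head motion.

    gaze: one gaze coordinate per sample (e.g., horizontal position).
    motion: per-sample list of head-motion regressors (any number of columns).
    Returns gaze with the motion-explained part removed; intercept and residual are kept.
    """
    if len(gaze) != len(motion):
        raise ValueError("gaze and motion must have one entry per sample")
    X = [[1.0] + list(row) for row in motion]  # design matrix with intercept column
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * g for r, g in zip(X, gaze)) for i in range(k)]
    beta = solve(xtx, xty)  # ordinary least squares via the normal equations
    return [g - sum(b * v for b, v in zip(beta[1:], row)) for g, row in zip(gaze, motion)]


if __name__ == "__main__":
    # Toy data: steady fixation at 5.0 contaminated by two motion regressors.
    motion = [[t, t % 3] for t in range(10)]
    gaze = [5.0 + 0.5 * m1 - 2.0 * m2 for m1, m2 in motion]
    print([round(v, 6) for v in correct_drift(gaze, motion)])
```

The normal-equation solve assumes the motion regressors are not collinear with each other or with the intercept; otherwise the system is singular.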

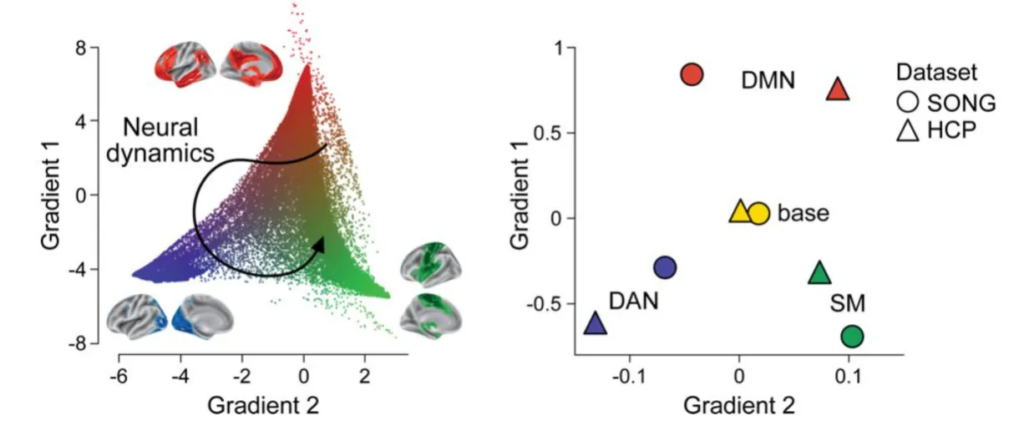

Cognitive and attentional state dynamics

The brain’s large-scale neural dynamics reflect ongoing changes in cognition and attention. Combining multi-task and naturalistic neuroimaging with dense behavioral sampling, we explore how these dynamics manifest across different situational contexts. We also extend this line of work to children with attention-deficit/hyperactivity disorder (ADHD) and autism spectrum disorder (ASD), investigating how neurodevelopmental conditions shape the repertoire and organization of whole-brain neural states.
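
Once each time point has been assigned to a discrete whole-brain state (for example by clustering or a hidden Markov model, both assumptions here), the repertoire and organization of states can be summarized with simple statistics such as fractional occupancy (the share of time spent in each state) and a transition probability matrix. The sketch below computes both from a hypothetical state-label sequence; it does not estimate the states themselves, and the function names are illustrative.

```python
from collections import Counter


def fractional_occupancy(states):
    """Proportion of time points assigned to each state."""
    n = len(states)
    return {s: c / n for s, c in sorted(Counter(states).items())}


def transition_matrix(states):
    """Row-normalized probability of moving from state a at time t to state b at t + 1."""
    labels = sorted(set(states))
    counts = {a: {b: 0 for b in labels} for a in labels}
    for a, b in zip(states, states[1:]):
        counts[a][b] += 1
    probs = {}
    for a in labels:
        total = sum(counts[a].values())
        # A state seen only at the final time point has no outgoing transitions.
        probs[a] = {b: (c / total if total else 0.0) for b, c in counts[a].items()}
    return probs


if __name__ == "__main__":
    # Toy sequence of state labels, one per fMRI time point.
    seq = [0, 0, 1, 1, 1, 2, 0, 0, 2, 2]
    print(fractional_occupancy(seq))
    for state, row in transition_matrix(seq).items():
        print(state, row)
```

Comparing these summaries between groups or contexts is one straightforward way to ask whether the set of visited states, or the way the brain moves between them, differs.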